Are "Woke" People More Depressed?

On how to read a scientific paper.


This past week, I came across a headline from the right-leaning New York Post about people like me. Trans people are in the news a lot lately, so that in itself isn’t unusual (what can I say, conservatives are just obsessed with us!), but this article wasn’t about one marginalized group specifically. It was about people who care about marginalized groups, and how apparently we’re all miserable.

The headline gained traction in right-wing online circles for what it says about their political opponents; the supposed conclusion of the study maps onto our cultural ideas of the “angry feminist”, the “annoying SJW”, the “bleeding heart liberal”, and so on. This in turn drew reactions from the left about the poor conclusions one might draw from the out-of-context line: “BREAKING: acknowledging how messed up the world is is upsetting”, that sort of thing.

Since the article was specifically about a scientific study, I immediately knew I had to learn more. After skimming through the original paper, I knew I had to give my thoughts on it in some long-form fashion. There’s a lot to be said about science media, the ways it’s disconnected from the science itself, the limitations of academic studies, and how we can all be more conscious consumers of media.

So, here’s my review of a Finnish paper about the woke left, as a scientist who happens to be part of the woke left! Along the way, I’ll be giving you some pointers about how to best engage with scientific works!

I’ve already told you Good Practice #1: skip past the sensationalist headline and go right to the source. Usually these media write-ups of scientific articles cite the paper in the first few paragraphs (if they don’t, disregard the whole article immediately).

Write-ups about scientific papers in mainstream news outlets are pretty common, and they can serve as an accessible introduction to a scientific concept, particularly for people who aren’t trained in reading academic jargon or who don’t have access to scientific journals (which is most people). These kinds of news articles can happen either when a scientist approaches the media with a cool finding, which appears to be what happened here, or when a science journalist stumbles on a paper they want to talk about. In the latter case, the journalist may reach out to the original authors or even other experts for help with the article; for example, some science journalists in Western Massachusetts know that I’m an expert on plastics, which is how I recently ended up contributing quotes to this article about microplastics from beverage bottles.

It definitely doesn’t hurt to read these media write-ups, but if the paper is available, you should see what it has to say about its own findings, especially for highly politically charged topics like this one. Luckily, this article seems to be open access, so let’s dive in! Follow along with the article for yourself at: https://onlinelibrary.wiley.com/doi/10.1111/sjop.13018

The next good practice is to read the paper’s abstract. This is usually available even when the full article is behind a paywall. Be prepared for a significantly less sexy write-up; think “Could This Fruit Cure Cancer???” versus “The fruit-derived protein [insert absurdly long chemical name here] in high concentrations showed moderate performance in reducing the acceleration of melanoma proliferation in these small rodents, p<0.05, n=30.”

In our case, “Construction and validation of a scale for assessing critical social justice attitudes” comes with a long abstract summarizing its methodology and key results. The first number to look at is the sample size, written as “n”. All else being equal, a larger sample makes a study more trustworthy, because the random quirks of individual participants average out. For n=4, that’s just a group of you and your buddies, which is probably heavily biased and doesn’t reflect the general population. A group of n=5,878, which is what this study has in total, is a lot better! Keep in mind, though, that a bigger sample shrinks random noise but can’t fix a sample that was skewed to begin with. More on this study’s sampling limitations a bit later.
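To make the sample-size point concrete, here’s a minimal Python sketch of the textbook 95% margin of error for a survey percentage, z * sqrt(p(1 - p) / n). It assumes a simple random sample and the worst case p = 0.5; this study’s samples weren’t simple random samples, so treat the numbers as an illustration of how noise shrinks with n, not as the paper’s actual error bars. The function name `margin_of_error` is just my own label.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion from a simple random sample.

    p = 0.5 is the worst case (widest interval); z = 1.96 corresponds to 95% confidence.
    This only captures random sampling noise, not bias from who ended up being surveyed.
    """
    return z * math.sqrt(p * (1 - p) / n)


# n = 4 (you and your buddies), the two studies separately, and the combined total.
for n in (4, 848, 5_030, 5_878):
    print(f"n = {n:>5}: ±{margin_of_error(n) * 100:.1f} percentage points")
```

Running it, the margin falls from about ±49 percentage points at n = 4 to roughly ±1.3 points at n = 5,878 (848 + 5,030). Note the square root: quadrupling the sample only halves the noise, and none of this corrects for bias in who answered.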

The abstract clues us into another aspect of this paper: it’s actually two studies rolled into one!

Participants for Study 1 (n = 848) were university faculty and students, as well as non-academic adults, from Finland. Participants responded to a survey about critical social justice attitudes. Twenty one candidate items were devised for an initial item pool, on which factor analyses were conducted, resulting in a 10-item pilot version of critical social justice attitude scales (CSJAS). Participants for Study 2 were a nationwide sample (n = 5,030) aged 15–84 from Finland. Five new candidate items were introduced, of which two were included in the final, seven-item, version of CSJAS.

It’s not uncommon for scientists to run a “pilot study” (a smaller version of the study you actually want to do) before running a much larger study, so that you can test and fix your methods before committing to the big one. This paper happens to report on both at once, rather than just the larger study, which gives insight into an important aspect of the scientific method: iterating on an existing model to refine it over time. Very cool!

In this case, the study’s author, Oskari Lahtinen, gave a group of 848 people (university faculty and students, plus some non-academic adults) a survey about “critical social justice attitudes”. How does he define this term? Let’s read past the abstract to find out.

[quoting a 2017 definition] “A critical approach to social justice refers to specific theoretical perspectives that recognize that society is stratified (i.e., divided and unequal) in significant and far-reaching ways along social group lines that include race, class, gender, sexuality, and ability. Critical social justice recognizes inequality as deeply embedded in the fabric of society (i.e., as structural), and actively seeks to change this.”

Having critical social justice attitudes (CSJA) can thus be said to reflect a propensity to:

perceive people foremost as members of identity groups and as being, witting or unwitting, perpetrators or victims of oppression based on the groups' perceived power differentials; and

advocate regulating how or how much people speak and how they act if there is a perceived power differential between speakers, and intervening in action or speech deemed oppressive

We can already see a gap between the paper and the NY Post write-up: “critical social justice attitudes” has a seemingly robust definition, while “woke” is pretty ill-defined and means basically anything Republicans don’t like. If you weren’t aware, the word “woke” started as an African-American Vernacular English (AAVE) term meaning “stay aware of police violence and other forms of racial injustice”, before being co-opted by the American right wing to mock the progressive tendency to draw connections between social injustice and its daily implications. The mere fact that a scientist from Finland knows the word “woke” speaks to its far-reaching cultural impact as a pejorative for people on the political left.

In fact, it was the commonality of the term, despite having no robust definition to speak of, that sparked the author’s interest in doing this study:

This is true in Finland as well, where the arrival of a critical social justice (often called “woke”) discourse has sparked much debate in the media (e.g., Koskela, 2021; Parikka, 2022). This debate has been largely data-free and it can thus be considered a worthwhile question to study how prevalent these attitudes are. Thus far, no reliable and valid instrument has existed to assess the extent and prevalence of these attitudes in different populations.

Here’s my first big red flag: there absolutely are metrics for measuring people’s political attitudes, including people’s attitudes toward social justice causes. Take the Pew Research Center’s Ideological Consistency Scale, which uses questions very similar to those in Lahtinen’s Critical Social Justice Attitudes (CSJA) scale. Or, the Basic Social Justice Orientations (BSJO) scale from 2017, which “measures individuals’ attitudes toward the following four basic distributive principles: equality, need, equity, and entitlement”. Or, the Social Justice Scale (SJS) from 2012; the list goes on! I found these simply by Googling “metrics for assessing social justice attitudes”, and I’m sure there are plenty more. People have been trying to quantitatively assess people’s political leanings for as long as we’ve lived in a democracy, so I was surprised that this study felt the need to create a brand-new scale from scratch, pilot study and all.

Looking at some of the items that make up Lahtinen’s Critical Social Justice Attitudes (CSJA) scale, they seem pretty typical of what you might expect in a survey about political attitudes. Remember the basic design of this study: participants responded to a big survey full of these items using a 4-point Likert scale (rate how much you agree with each statement, where 1 = “completely disagree,” 2 = “somewhat disagree,” 3 = “somewhat agree,” and 4 = “completely agree”), and then answered questions about their anxiety, depression, and happiness to see if and how their CSJA scores correlated with their mental health.
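Here’s a rough Python sketch of that design: average each person’s Likert answers into one scale score, then measure how those scores line up with a mental-health score using a Pearson correlation. Everything here is an assumption for illustration: the seven-item responses and anxiety totals are made up, averaging is one common way to score a Likert scale (not necessarily the paper’s exact procedure), and the helper names are hypothetical.

```python
import math

# Hypothetical responses: each inner list is one respondent's answers to seven
# scale items on a 1-4 Likert scale (1 = completely disagree, 4 = completely agree).
# These numbers are made up for illustration; they are NOT the study's data.
likert_answers = [
    [4, 3, 4, 4, 3, 4, 4],
    [2, 2, 1, 2, 3, 2, 2],
    [3, 3, 3, 2, 3, 3, 4],
    [1, 1, 2, 1, 1, 2, 1],
    [4, 4, 3, 4, 4, 3, 4],
]
# Hypothetical anxiety totals for the same five respondents.
anxiety_scores = [9, 5, 7, 3, 10]


def scale_score(answers: list[int]) -> float:
    """Average the item responses into one score (an assumed scoring rule)."""
    return sum(answers) / len(answers)


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation: covariance divided by the product of the spreads."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


scores = [scale_score(a) for a in likert_answers]
r = pearson_r(scores, anxiety_scores)
print(f"r = {r:.2f}")
```

A positive r only says the two scores tend to rise together in this (fake) group. With a cross-sectional survey like this one, it can’t tell you which causes which, or whether something else drives both.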

The creation of a new CSJA scale makes it all the more interesting that the author used some very well-established scales for evaluating participant mental health. Specifically, he used metrics for anxiety (the GAD-7 generalized anxiety measure), depression (the Finnish modification of the two-factor revised Beck depression inventory, or R-BDI), and happiness (items from the UN’s World Happiness Report). The GAD-7 is one you’ve probably filled out for every doctor’s visit in recent memory:

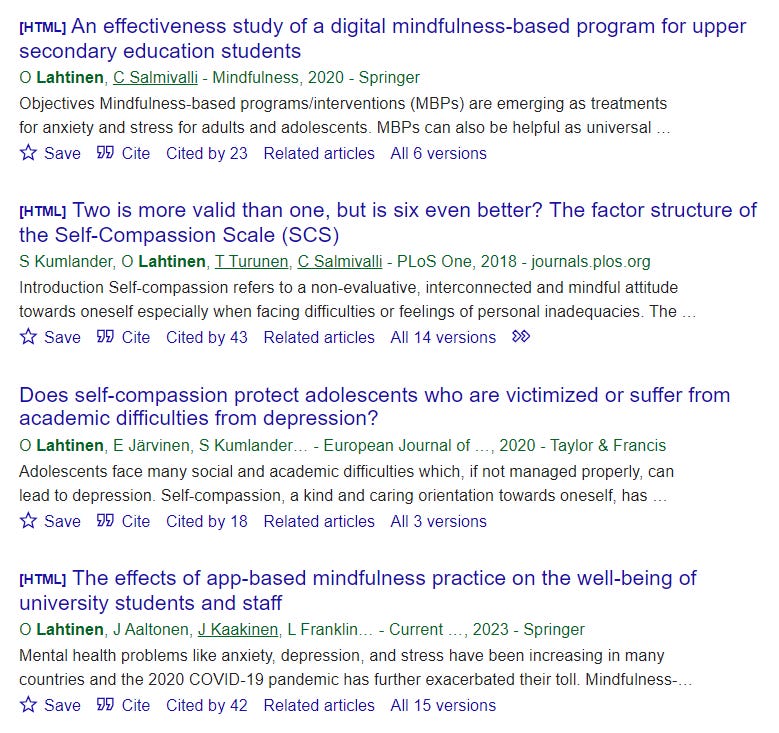
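For the curious, scoring the GAD-7 is simple arithmetic: each of the seven items is answered 0 (“not at all”) to 3 (“nearly every day”), the answers are summed to a total from 0 to 21, and the commonly used cutoffs of 5, 10, and 15 mark mild, moderate, and severe anxiety. The sketch below is a hedged illustration of that standard scoring; the function name is my own, and I’m not claiming the paper used these bands rather than just the raw totals.

```python
# Severity bands commonly used with the GAD-7 total score, checked from highest down.
GAD7_BANDS = [(15, "severe"), (10, "moderate"), (5, "mild"), (0, "minimal")]


def score_gad7(responses: list[int]) -> tuple[int, str]:
    """Sum seven 0-3 item responses and return (total, severity label)."""
    if len(responses) != 7:
        raise ValueError("the GAD-7 has exactly 7 items")
    if any(r not in (0, 1, 2, 3) for r in responses):
        raise ValueError("each item is scored 0, 1, 2, or 3")
    total = sum(responses)
    for cutoff, label in GAD7_BANDS:
        if total >= cutoff:
            return total, label
    raise AssertionError("unreachable: the total is never negative")


print(score_gad7([1, 2, 1, 0, 1, 2, 1]))  # (8, 'mild')
```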

This brings us to the next big lesson in reading scientific articles: look into the authors who wrote it. In particular, see where their expertise is, how many other studies they’ve published, and with whom. A quick Google reveals that Oskari Lahtinen is an ice hockey player…okay, maybe it’s not that Oskari Lahtinen. Clicking on the author’s name in the article’s webpage shows that he’s only written one article for a journal under the Wiley Online Library: this article. Uh oh. Punching his name into Google Scholar shows us some of his other work, with titles like “An effectiveness study of a digital mindfulness-based program for upper secondary education students” and “Does self-compassion protect adolescents who are victimized or suffer from academic difficulties from depression?”

After poking around some other search results, it dawned on me: This guy isn’t a political scientist, he’s a psychologist. This explains why he was very familiar with ways to assess anxiety and depression, yet had no clue about the extensive body of work on how to assess people’s political opinions.

To be 100% clear, I’m not saying this study is utterly useless. I am not Cancel Culture-ing psychology researcher Oskari Lahtinen, PhD. I’m simply pointing out the limitations of this study in measuring what it set out to measure. The reason we use well-established metrics for assessing political opinion is that a metric validated by hundreds of prior studies is probably more precise than a brand-new, unvalidated one. The “CSJA scale” has only been validated by one study (this one), meaning it can’t be taken as seriously as scales that have been tested across many studies and populations.
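Validation involves several checks, and one of the most common is internal consistency: do a scale’s items move together, as if they’re measuring the same underlying attitude? That’s often summarized with Cronbach’s alpha, α = k/(k − 1) · (1 − Σ item variances / variance of total scores), where k is the number of items. Below is a minimal Python sketch on made-up responses. I’m not claiming this is the exact analysis in the paper (the abstract mentions factor analyses), and a high alpha in one sample is only one piece of evidence, which is exactly why replication across studies matters.

```python
from statistics import variance


def cronbach_alpha(rows: list[list[int]]) -> float:
    """Cronbach's alpha for a respondents-by-items table of scores.

    rows: one list per respondent, each holding that person's item scores.
    Uses sample variances throughout (the n-1 choice cancels out in the ratio).
    """
    k = len(rows[0])                      # number of items
    items = list(zip(*rows))              # transpose: one tuple per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical 1-4 Likert answers: five respondents, four items each.
responses = [
    [4, 4, 3, 4],
    [2, 1, 2, 2],
    [3, 3, 3, 4],
    [1, 2, 1, 1],
    [4, 3, 4, 4],
]
print(f"alpha = {cronbach_alpha(responses):.2f}")
```

Here the made-up items agree strongly (alpha comes out around 0.95). A real scale needs this kind of check repeated in new samples, plus evidence that it measures what it claims to, before anyone should lean on it.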

Allow me to point to the chart: the diagram below explains why we should take well-established metrics and studies more seriously than any individual paper, even (and especially) if it validates our existing beliefs. The paper we’ve been talking about today is a “cross-sectional study”, meaning that it looks at a specific population at a single point in time (i.e., the time of the survey). Above that would be, for example, a cohort study, where the same participants are surveyed again a few months or years down the line to see if their attitudes have shifted, and above that a randomized controlled trial, where researchers test an intervention, like randomly assigning some of the “woke” crowd to do mindfulness exercises. At the top are meta-analyses, which are basically papers that pool the findings of many other papers (sometimes hundreds) to see what they have in common. (Side note: ignoring this hierarchy is how one paper saying the Earth is cooling down gets more coverage than the 999 papers showing it’s warming up, propping up climate denial!)
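To see why pooling beats cherry-picking, here’s a minimal Python sketch of the simplest kind of meta-analysis, a fixed-effect inverse-variance average: each study’s estimate is weighted by 1/SE², so more precise studies count for more. The ten “studies” are hypothetical numbers I made up (nine agreeing, one small outlier), and real meta-analyses often use more elaborate random-effects models.

```python
import math


def pooled_estimate(estimates: list[float], std_errors: list[float]) -> tuple[float, float]:
    """Fixed-effect (inverse-variance) pooled estimate and its standard error."""
    weights = [1 / se**2 for se in std_errors]
    pooled = sum(w * est for w, est in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1 / sum(weights))
    return pooled, pooled_se


# Hypothetical: nine precise studies finding an effect of 0.30,
# plus one small, noisy study pointing the other way (-0.20).
estimates = [0.30] * 9 + [-0.20]
std_errors = [0.05] * 9 + [0.20]

pooled, se = pooled_estimate(estimates, std_errors)
print(f"pooled effect = {pooled:.2f} ± {1.96 * se:.2f}")
```

The lone contrarian study barely moves the result (the pooled effect stays around 0.30), which is the whole point: no single paper, including this one, gets to overrule the rest of the evidence.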

We don’t need to look far to find the study’s limitations, though. In fact, we only need to scroll to the bottom of the page! The “Limitations and future directions” section states some valid reasons to give pause on these findings, including:

“169 out of 848 respondents (19.9%) in Study 1 and 400 out of 5,030 respondents (8.0%) in Study 2 reported they did not understand the meaning of the term “woke”” (oh my god?????)

“the wording of a scale item (CSJAS6) had to be corrected after the studies and there was an error in the Finnish grammar of CSJAS23 that may have affected responses to it”

“study respondents were somewhat more educated than Finns are on average, due to having samples from a university and based on the readership of the Helsingin Sanomat newspaper”

“due to questionnaire length concerns, depression in Study 2 was measured with a single item. Ideally this study would have employed the R-BDI as Study 1 did, and the single item measure may have compromised reliability of the depression scores in Study 2”

…And, obviously, the study was done in Finland, so it’s unclear if the results of this study can be generalized globally.

But okay, this study is still pointing us toward some kind of truth. As a matter of fact, the “well-being gap” between liberals and conservatives is well documented and has been for literal decades. Take this 2022 analysis of survey data from 12th-grade students (n=86,138), which let students self-identify their political affiliation and found that depression rates among liberal students have been consistently higher than among conservative students for years, with rates among female students higher than among male students.

There are many complicated reasons for this—chief among them being, yes, being constantly aware of how thoroughly messed up the world is does make you kind of anxious—but this essay is already long enough. So I want to end on two things.

For one, Lahtinen has actually noticed the story going viral in American right-wing circles and has responded to it on his own website! It’s a rare treat to see a scientist engage with the public like this, so if you want to hear more about his approach to the study, check out his blog post here!

Lastly, I’d like to point out that the reason the Right is obsessed with pathologizing left-wing ideology—spreading news that we’re anxious, depressed, unhappy, etc.—is that it’s a proxy for saying that we’re wrong. That we would be happy if we simply ignored society’s ills. That our depression is a sign that leftism represents some incongruity with the “natural order” of things. Many Black authors like Ismatu Gwendolyn and Ayesha Khan, PhD (who you should subscribe to, by the way) have argued that psychiatric diagnoses are a tool of the oppressor.

Psychiatric mental asylums were created to lock up people who rebelled against white male authority to coerce them into slave labor for the state and psychiatric diagnoses were developed in this context. “Drapetomania” was one of the earliest mental disorders fabricated as a disease of “insanity” solely afflicting Black slaves who escaped while Hysteria was a disease afflicting “unhappy miserable women” who did not serve “good husbands” well (i.e. an outcome of their forced domesticity). -Ayesha Khan, “Psychiatric diagnoses and the DSM are a sham” (Substack)

When you rebel against society’s norms, you get labeled as “crazy”. I guess I’d just rather be crazy than complicit.

Currently Reading

Speaking of data science, we’re starting to see some insights from the 2022 US Trans Survey! Among other things, it showed that transitioning had a 94% satisfaction rate. *TV ad voice* Try transition today!

I was just added to a list of other queer Substack authors! Check out the Qstack list here. I’m very grateful to be a part of this network! 💖

Watch History

An excellent video on why transphobia hurts cis women, too, particularly Black cis women.

A hilarious reaction to a Dhar Mann video about homophobia, to pick yourself up if you’re feeling some of that lefty depression!

An analysis of time travel media that focuses on Black characters.

Bops, Vibes, & Jams

I continue to be obsessed with Remi Wolf! Her new song “Cinderella” is the perfect funky pick-me-up.

I’ve been getting back into physical media lately, and as we come upon the 10-year anniversary of “To Pimp A Butterfly”, I went and bought myself a copy on CD. Pop this back on if you haven’t in a while!

And now, your weekly Koko.

That’s all for now! See you next week with more sweet, sweet content.

In solidarity,

-Anna
